Introduction

In our last LinkedIn poll, AWS CloudTrail was the most voted option. As promised, we are continuing our blog series demonstrating how we help our customers reduce their Splunk costs using Cribl LogStream.

AWS CloudTrail

AWS CloudTrail helps you manage the governance, compliance, operational auditing and risk auditing requirements of your AWS accounts. CloudTrail events cover API and non-API calls made through the AWS Management Console, the AWS CLI, AWS SDKs and other AWS services. These logs offer enhanced visibility and better insight into user and resource activity across your AWS infrastructure, and serve as a valuable source of intelligence for security investigations.

CloudTrail records three types of events:

- Management events (control plane operations): provide details about management operations performed on the resources in an AWS account; CloudTrail logs these by default.

- Data events (data plane operations): record resource operations performed on or within a resource; these are not logged by default.

- CloudTrail Insights events: capture unusual API call rate or error rate activity.

Best practice is for every organisation to enable CloudTrail logging so that the logs can be queried effectively, either proactively as a countermeasure or in response to an incident. Given the breadth and depth of these logs, however, inspecting CloudTrail events can be challenging, which is why organisations commonly integrate SIEM tools for real-time monitoring and analysis to help them respond proactively to security incidents. Over time, though, CloudTrail logs fill up with noisy, non-security-related events, and the licence charges of these analytics platforms can soar, dissuading organisations from taking full advantage of CloudTrail’s potential.

This blog demonstrates how your organisation can use Cribl LogStream to shape your CloudTrail logs for more effective use in Splunk, helping reduce your licence, infrastructure and storage costs whilst ensuring an improved security posture.

Why Cribl

Cribl enables observability by giving you the power to make choices that best serve your business, without the negative tradeoffs. As your goals evolve, you have the flexibility to make new choices including new tools and destinations.

At Leo CybSec, we believe in the value Cribl can bring to our customers, which is why we present here Splunk-based evidence of Cribl’s ability to reduce Splunk licence usage while maintaining log fidelity for a variety of noisy log sources that cause headaches and sleepless nights for Splunk admins.

Ingesting AWS CloudTrail Logs

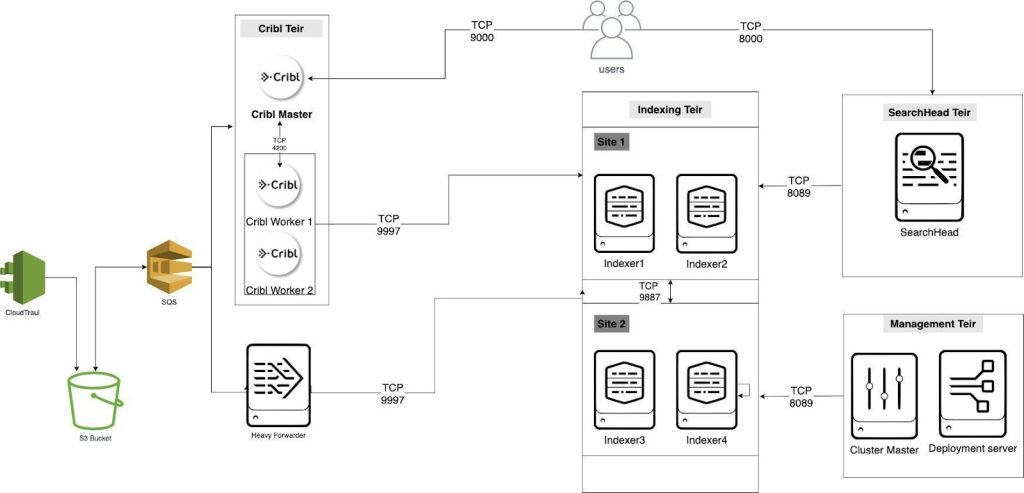

Our lab consists of 3 tiers: AWS, Cribl and Splunk.

AWS:

- Configure an SQS-based S3 input for CloudTrail delivery, as per the AWS documentation

- Set up a dedicated S3 bucket to host the CloudTrail log files

- Set up an SQS queue with sufficient permissions to receive S3 notifications

- Configure S3 notifications to SQS
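The AWS-side wiring above comes down to two JSON documents: a queue policy that lets S3 send messages to the SQS queue, and a bucket notification configuration that publishes object-created events to that queue. The sketch below builds both as Python dictionaries. It is a minimal illustration, assuming a hypothetical bucket name, queue ARN and account ID; the helper names `sqs_policy_for_s3` and `bucket_notification_config` are ours and not part of any AWS SDK.

```python
import json
from typing import Optional


def sqs_policy_for_s3(queue_arn: str, bucket_name: str, account_id: str) -> dict:
    """Queue policy allowing one S3 bucket to send event notifications."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                "Condition": {
                    # Only accept messages that originate from the CloudTrail bucket...
                    "ArnLike": {"aws:SourceArn": f"arn:aws:s3:::{bucket_name}"},
                    # ...and from a bucket owned by our account
                    "StringEquals": {"aws:SourceAccount": account_id},
                },
            }
        ],
    }


def bucket_notification_config(queue_arn: str, prefix: Optional[str] = None) -> dict:
    """S3 notification configuration that publishes object-created events to SQS."""
    queue_config: dict = {"QueueArn": queue_arn, "Events": ["s3:ObjectCreated:*"]}
    if prefix:
        # Optionally restrict notifications to keys under a prefix, e.g. "AWSLogs/"
        queue_config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}
        }
    return {"QueueConfigurations": [queue_config]}


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only
    queue = "arn:aws:sqs:eu-west-2:111122223333:cloudtrail-notifications"
    print(json.dumps(sqs_policy_for_s3(queue, "example-cloudtrail-bucket", "111122223333"), indent=2))
    print(json.dumps(bucket_notification_config(queue, prefix="AWSLogs/"), indent=2))
```

With boto3, the first document would be serialised with `json.dumps` and set as the queue’s `Policy` attribute via `set_queue_attributes`, and the second passed as `NotificationConfiguration` to `put_bucket_notification_configuration`. Note that the latter replaces any notification configuration already on the bucket.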

Our lab is hosted on AWS and consists of the following systems:

| Server role | IP |
| --- | --- |
| Cribl Worker 1 | 172.31.70.181 |
| Cribl Worker 2 | 172.31.66.108 |
| Splunk Indexer 1 | 172.31.66.108 |
| Splunk Indexer 2 | 172.31.72.157 |
| Splunk Indexer 3 | 172.31.72.93 |
| Splunk Indexer 4 | 172.31.73.235 |
| Splunk Cluster Master | 172.31.69.131 |
| Heavy Forwarder | 172.31.64.212 |

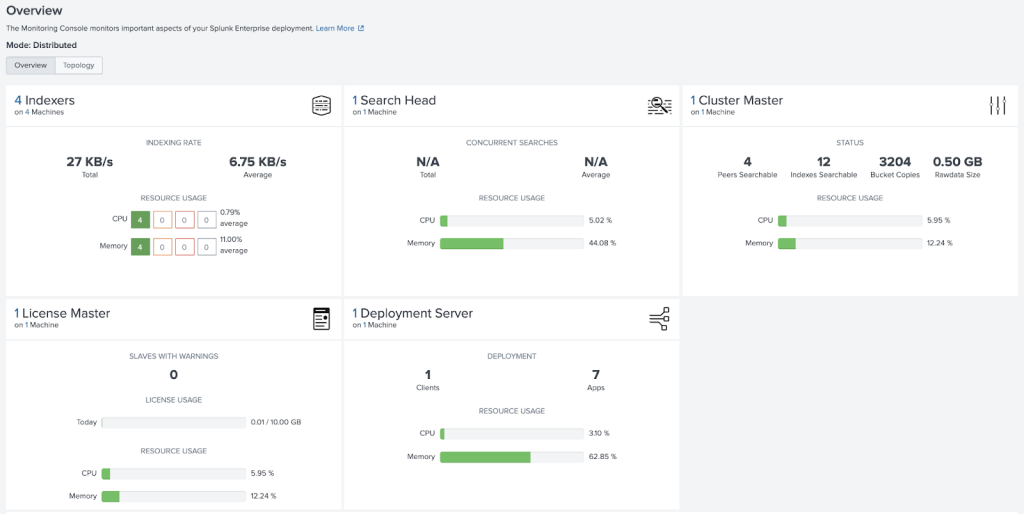

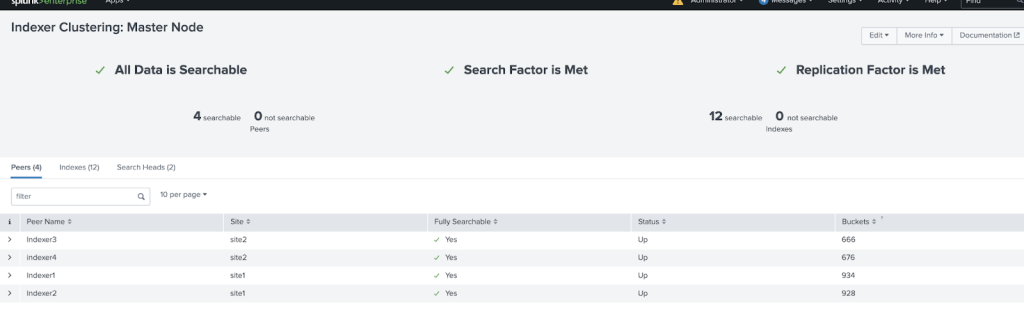

The Splunk Architecture

A multi-site Splunk deployment with 4 indexers and indexer discovery enabled.

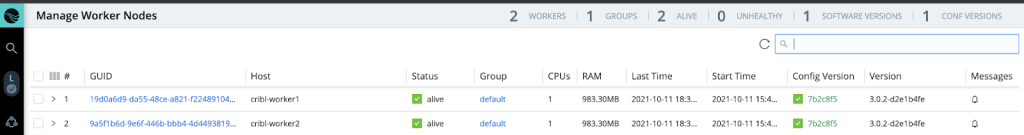

Cribl LogStream Architecture

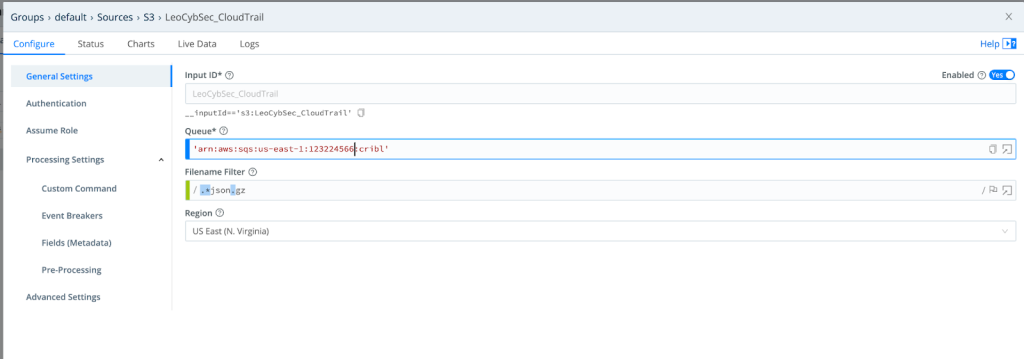

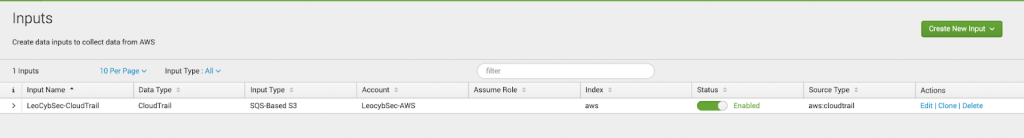

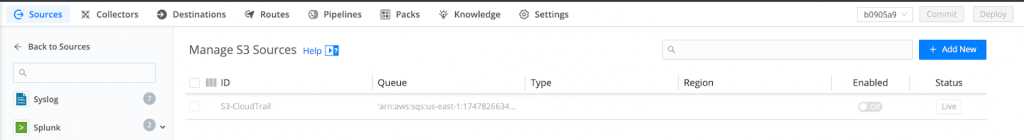

Enable A Source For S3 Using SQS

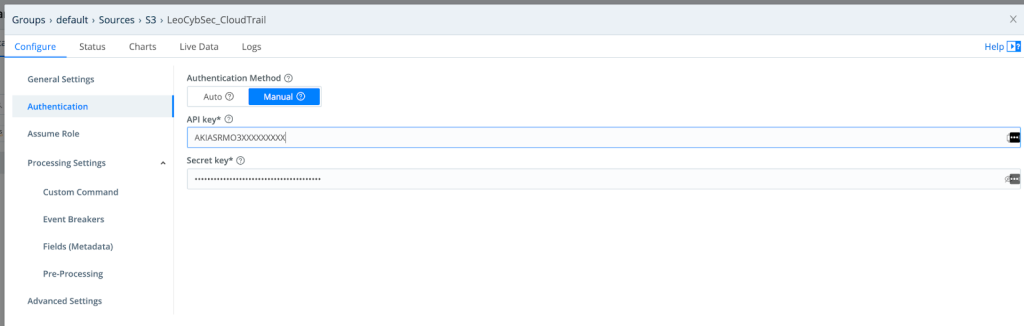

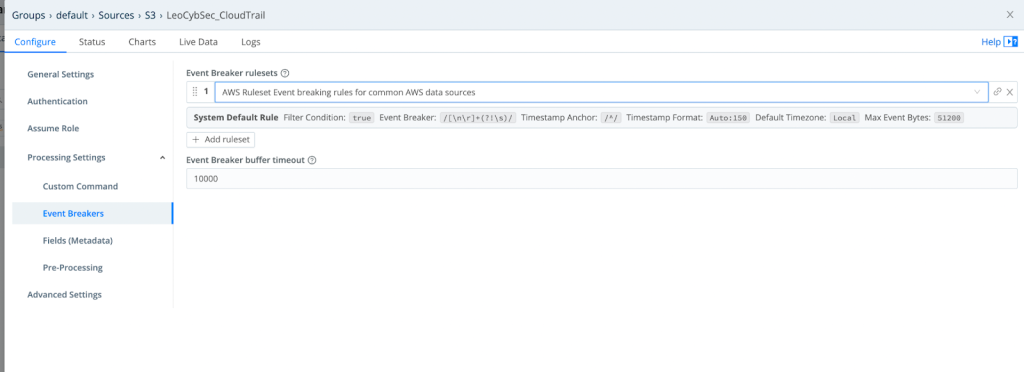

One of the many sources Cribl LogStream supports is S3 via SQS, which takes three steps to enable in the LogStream UI (the account number and API key shown in the screenshots have been changed):

1. Configure the input parameters

2. Enter the credentials (API key and secret key)

3. Enter the event breaker settings (LogStream has predefined rules for reading AWS logs)

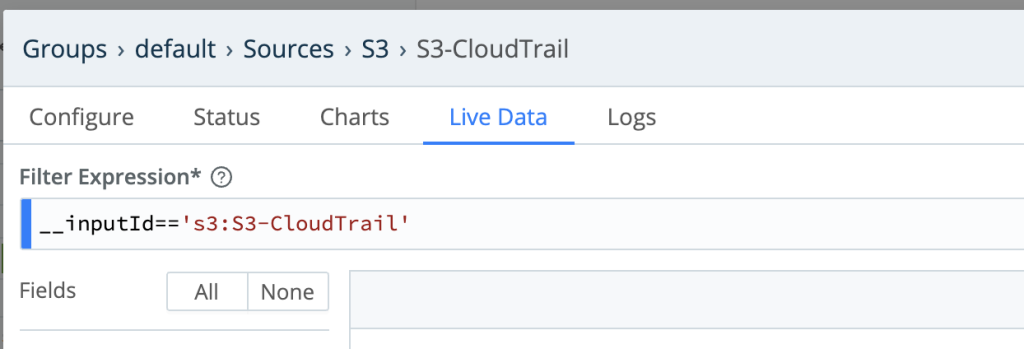

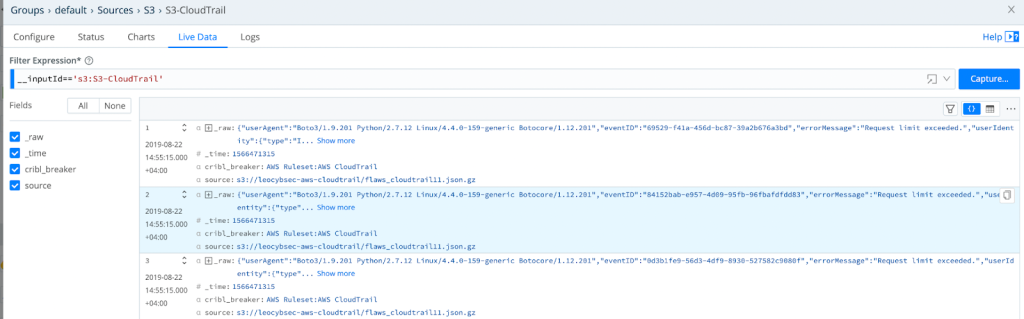

With this configuration in place, CloudTrail logs are arriving in LogStream, as shown in the image below:

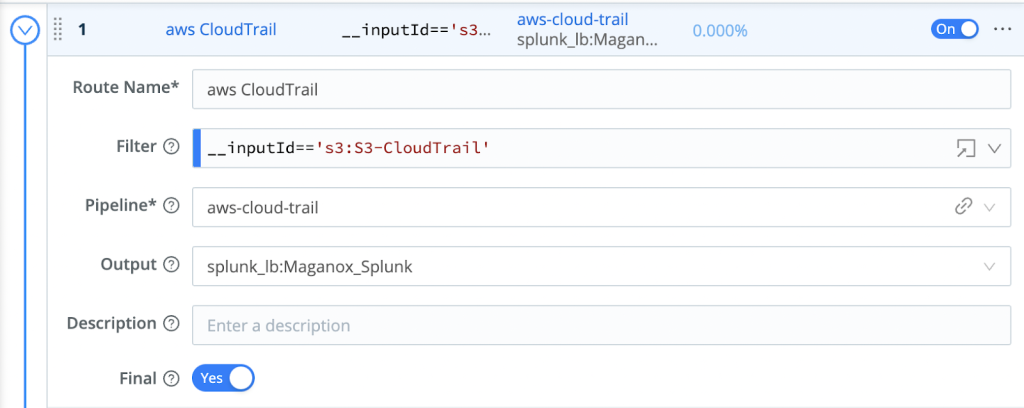
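Conceptually, an SQS-based S3 source polls the queue, reads the S3 event notification in each message and then fetches the object it references. The sketch below shows only the parsing step, assuming the standard S3 event notification JSON format; `objects_from_sqs_body` is a hypothetical helper, not LogStream or Splunk code. Object keys arrive URL-encoded in notifications, hence the `unquote_plus` call.

```python
import json
from urllib.parse import unquote_plus


def objects_from_sqs_body(body: str) -> list[tuple[str, str]]:
    """Return the (bucket, key) pairs referenced by one S3 event notification."""
    message = json.loads(body)
    pairs = []
    # S3 sends a one-off s3:TestEvent when notifications are first configured;
    # it has no "Records" array, so it yields nothing here
    for record in message.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in notifications (e.g. spaces arrive as '+')
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs
```

Each returned pair is what a reader would then download and hand to the event breaker.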

Create A Route In LogStream To Deal With CloudTrail Logs

The route below sends CloudTrail logs through a LogStream pipeline that applies the processing.

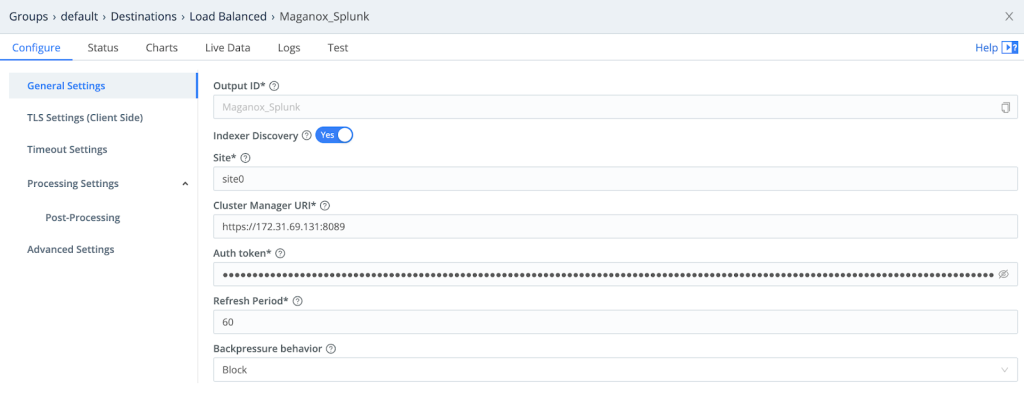

Create A Load-Balanced LogStream Destination To The Splunk Cluster

The Log Sources

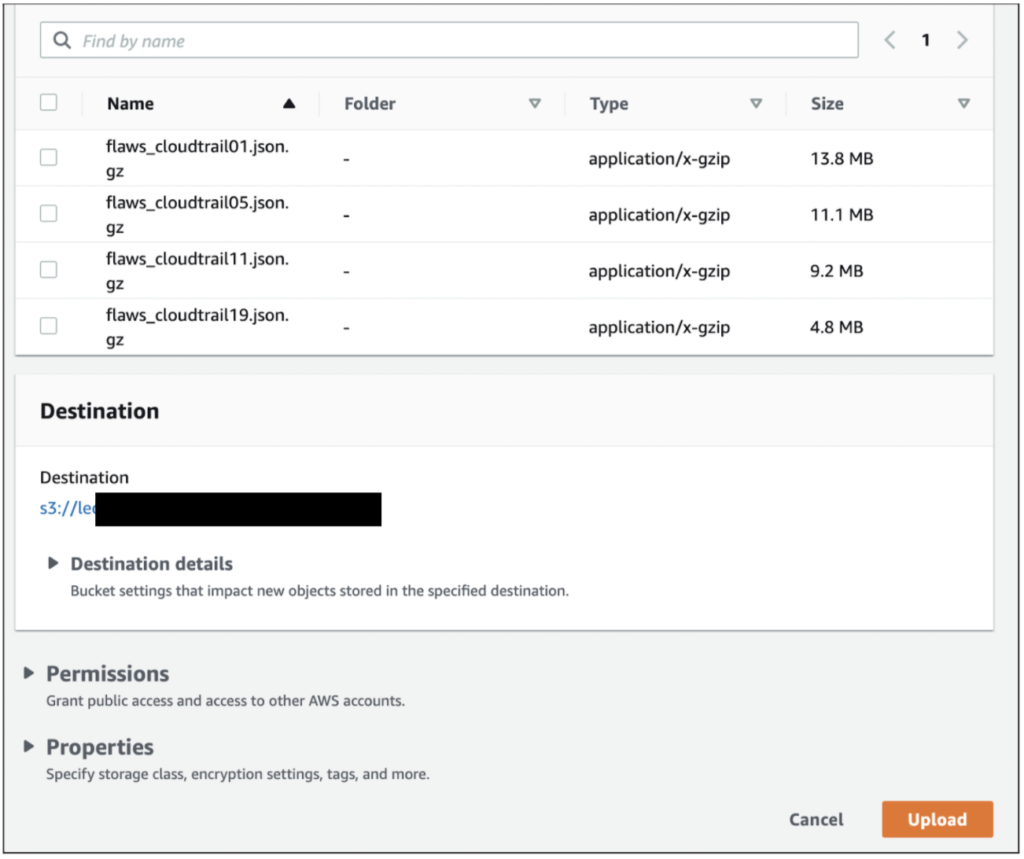

- We used log samples from the publicly available dataset of CloudTrail logs from flaws.cloud: https://summitroute.com/blog/2020/10/09/public_dataset_of_cloudtrail_logs_from_flaws_cloud/

- We sent the same copy of the logs via two routes, as shown in the lab figure below:

  - Upload the sample to S3 ⇒ SQS ⇒ Splunk Heavy Forwarder ⇒ Splunk indexers

  - Upload the sample to S3 ⇒ SQS ⇒ LogStream worker ⇒ Splunk indexers

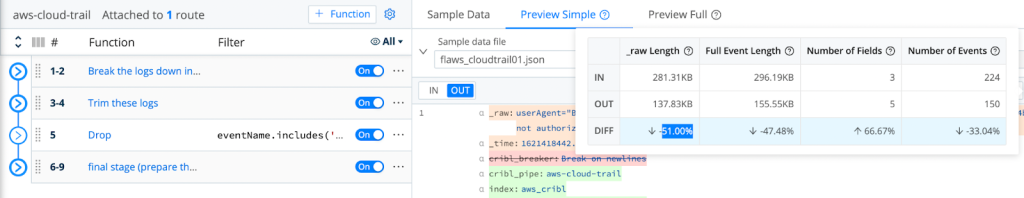

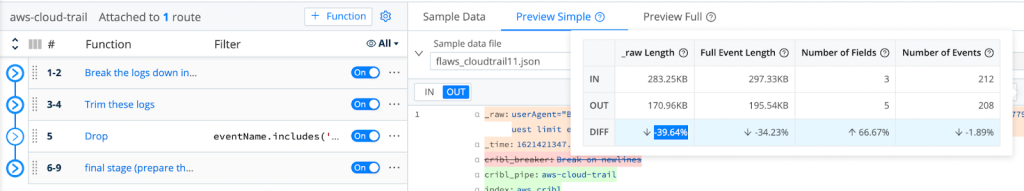

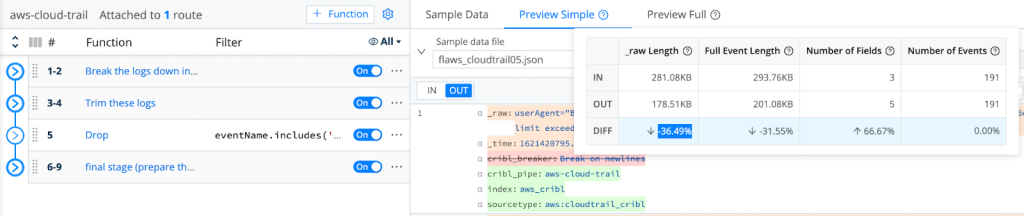

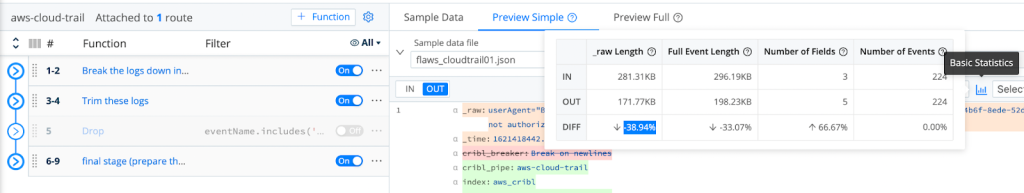

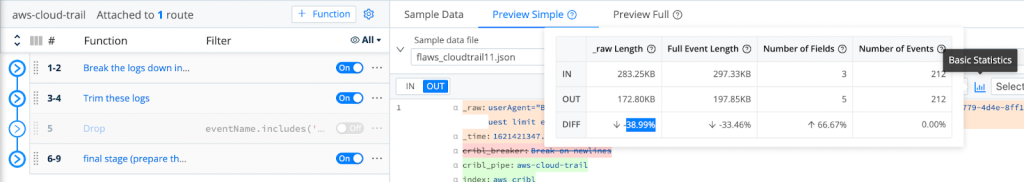

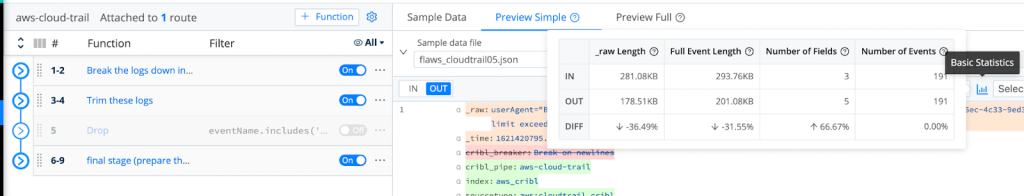

We used 4 samples from the publicly available CloudTrail logs:

- flaws_cloudtrail01.json.gz

- flaws_cloudtrail05.json.gz

- flaws_cloudtrail19.json.gz

- flaws_cloudtrail11.json.gz
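CloudTrail delivers each log file as gzip-compressed JSON with a top-level `Records` array, and the predefined AWS event breaker mentioned earlier turns each file into one event per record. The plain-Python sketch below illustrates the same idea and counts events by `eventName`, assuming the sample files follow that format and fit in memory. The function names are hypothetical.

```python
import gzip
import json
from collections import Counter


def records_from_gzip_bytes(data: bytes) -> list[dict]:
    """Split one gzip-compressed CloudTrail log file into individual events."""
    document = json.loads(gzip.decompress(data))
    # Each file holds many events under the top-level "Records" key
    return document.get("Records", [])


def records_from_file(path: str) -> list[dict]:
    with open(path, "rb") as fh:
        return records_from_gzip_bytes(fh.read())


def count_by_event_name(records: list[dict]) -> Counter:
    """How many events of each eventName a file contains."""
    return Counter(r.get("eventName", "<missing>") for r in records)
```

For example, `count_by_event_name(records_from_file("flaws_cloudtrail01.json.gz")).most_common(10)` would list the ten most frequent event names in the first sample, a quick way to gauge how much of a file the Describe* and List* calls account for.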

Findings

Using Cribl LogStream

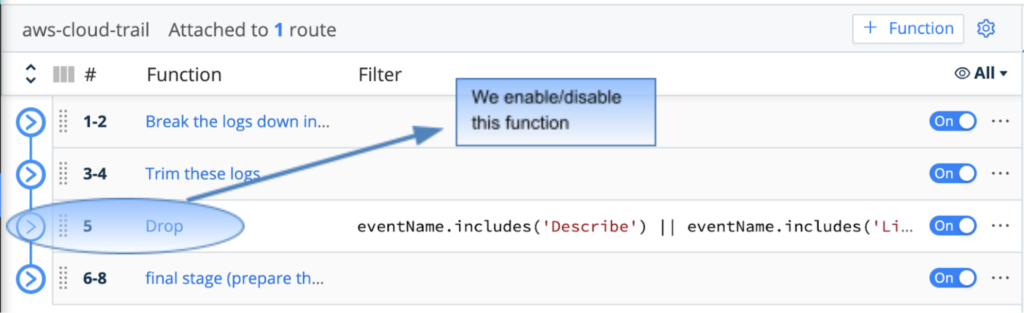

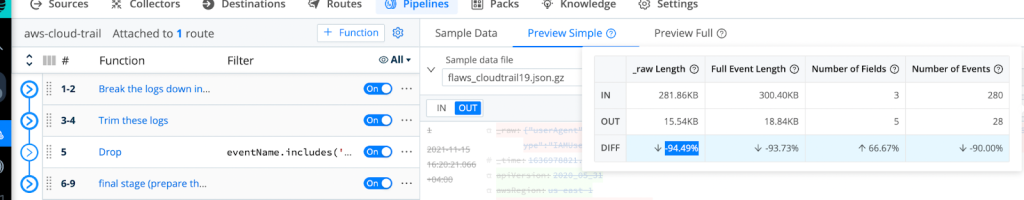

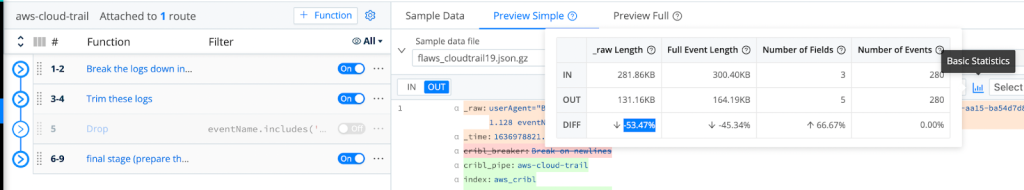

We used a mix of 9 functions, divided into the 4 groups below, to achieve the reduction by:

- Flattening the logs from nested, multi-layer JSON into key-value pairs

- Renaming long field names to short (abbreviated) ones and re-applying the mapping in Splunk

- Removing duplicate fields and fields that can be calculated

- Dropping non-security-related events
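The first two techniques can be illustrated outside LogStream with a short Python sketch: nested JSON is flattened into dotted key-value pairs, long field names are swapped for abbreviations, and the inverse mapping restores the original names, much as a rename or field alias would on the Splunk side. The abbreviation table is hypothetical, not the mapping we used in the lab, and this is not how LogStream’s own functions are implemented.

```python
from typing import Any

# Hypothetical abbreviations; any short, unambiguous names would do
ABBREVIATIONS = {
    "userIdentity.arn": "ui.arn",
    "userIdentity.accountId": "ui.acct",
    "userIdentity.type": "ui.type",
    "sourceIPAddress": "src",
    "userAgent": "ua",
}
EXPANSIONS = {short: long for long, short in ABBREVIATIONS.items()}


def flatten(event: dict, parent: str = "", sep: str = ".") -> dict[str, Any]:
    """Flatten nested dicts into one level of dotted keys."""
    flat: dict[str, Any] = {}
    for key, value in event.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            # Recurse into nested objects; empty objects contribute no keys
            flat.update(flatten(value, name, sep))
        else:
            # Lists and scalars are kept as-is
            flat[name] = value
    return flat


def abbreviate(flat: dict[str, Any]) -> dict[str, Any]:
    """Replace long field names with their short forms (unknown names unchanged)."""
    return {ABBREVIATIONS.get(k, k): v for k, v in flat.items()}


def expand(flat: dict[str, Any]) -> dict[str, Any]:
    """Reverse mapping, as would be re-applied at search time."""
    return {EXPANSIONS.get(k, k): v for k, v in flat.items()}
```

`expand(abbreviate(flatten(event)))` returns the flattened event with its original field names, which is the property the Splunk-side mapping relies on, provided no abbreviation collides with a real field name.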

In this pipeline we will demonstrate 2 reduction scenarios:

- Dropping all non-security-related events and applying event-level reduction

- Keeping all events and applying event-level reduction

Dropping Non-Security Events

One efficient way to trim CloudTrail log volume is to remove events that add no value to security assessments. In our scenario, we identified “Describe*” and “List*” events as unnecessary, so we used Cribl to drop all events whose eventName matches Describe* or List*.
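The drop rule amounts to a wildcard match on `eventName`. The sketch below expresses it in Python with `fnmatch`; in LogStream the equivalent would be a Drop function whose filter matches the same names. The pattern list mirrors the Describe* and List* choice above, and the function names are hypothetical.

```python
from fnmatch import fnmatchcase

# Event names treated as non-security-relevant in this scenario
DROP_PATTERNS = ("Describe*", "List*")


def should_drop(event: dict, patterns=DROP_PATTERNS) -> bool:
    """True if the event's eventName matches any of the wildcard patterns."""
    name = event.get("eventName", "")
    # Case-sensitive, matching how CloudTrail spells event names
    return any(fnmatchcase(name, p) for p in patterns)


def filter_events(events: list[dict], patterns=DROP_PATTERNS) -> list[dict]:
    """Keep only the events that no drop pattern matches."""
    return [e for e in events if not should_drop(e, patterns)]
```

Passing an empty pattern tuple keeps every event, which corresponds to the scenario where the drop function is disabled.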

After dropping those events, we measured the achieved reduction using LogStream’s Basic Statistics for the selected sample files:

As Shown Above, We Achieved A Minimum 36.49% Reduction In Log Volume!
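Reduction percentages like this one compare the volume entering the pipeline with the volume leaving it: reduction = (bytes in − bytes out) / bytes in × 100. The helpers below show that arithmetic per file and as a size-weighted total across several files. The byte counts would come from LogStream’s Basic Statistics or Splunk’s license usage; the function names are hypothetical and no lab figures are reproduced here.

```python
def reduction_pct(bytes_in: int, bytes_out: int) -> float:
    """Percentage reduction in volume between pipeline input and output."""
    if bytes_in <= 0:
        raise ValueError("bytes_in must be positive")
    return (bytes_in - bytes_out) / bytes_in * 100


def per_file_reductions(samples: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Reduction for each file, given {name: (bytes_in, bytes_out)}."""
    return {name: reduction_pct(b_in, b_out) for name, (b_in, b_out) in samples.items()}


def overall_reduction_pct(samples: dict[str, tuple[int, int]]) -> float:
    """Reduction across all files together, so larger files weigh more."""
    total_in = sum(b_in for b_in, _ in samples.values())
    total_out = sum(b_out for _, b_out in samples.values())
    return reduction_pct(total_in, total_out)
```

The per-file and overall figures can differ noticeably when the samples vary in size, so it is worth stating which one a headline number refers to.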

Keeping Non-Security Events

In this case we keep the Describe and List events by disabling the drop function.

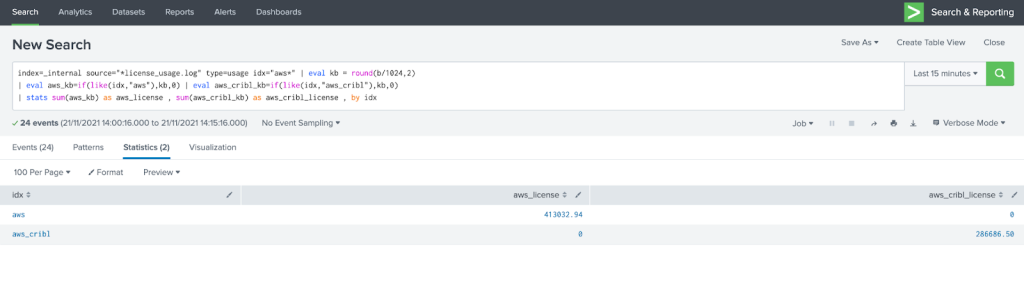

Using Splunk

To validate the above findings in Splunk, we pushed the 4 samples into Splunk in the following steps:

1. On the Splunk HF, we enable the SQS-based S3 input for CloudTrail logs

2. In LogStream, we disable the SQS-based S3 source to ensure logs only flow to the Splunk HF

3. We upload the 4 sample files into the destination S3 bucket

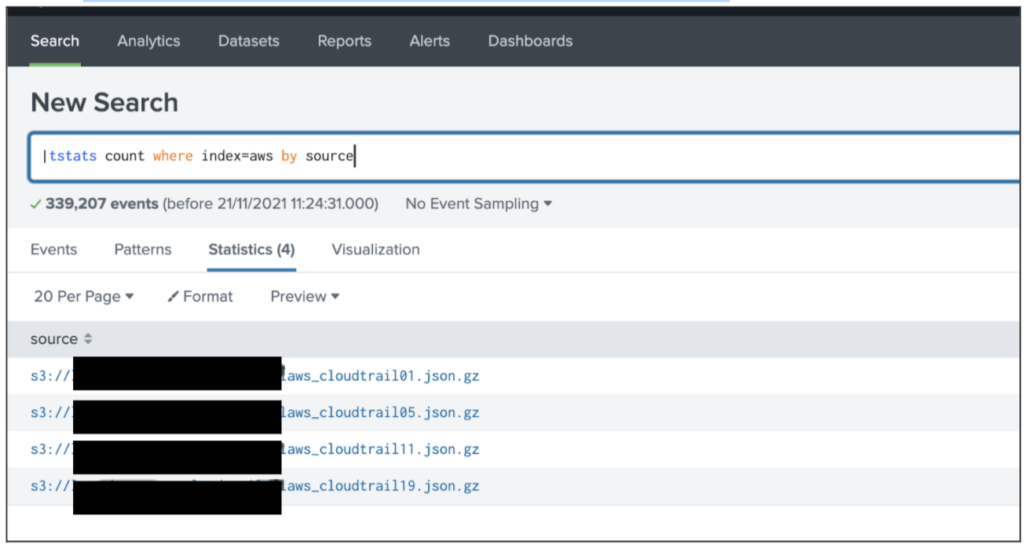

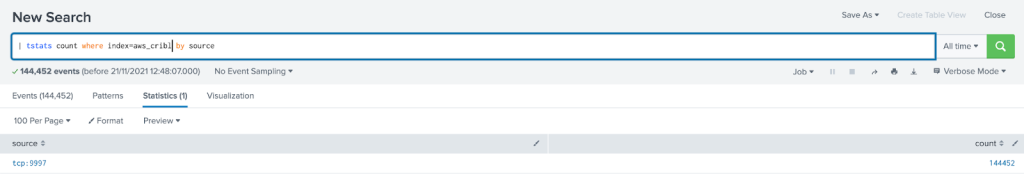

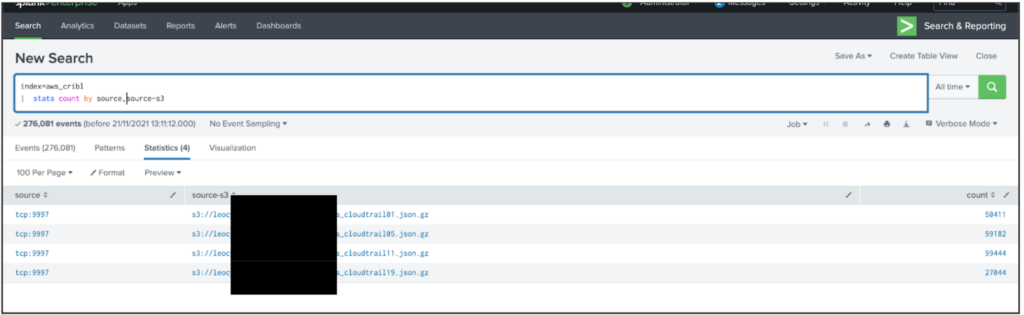

4. Verify that the logs are arriving in Splunk under the AWS index

5. Disable the Splunk HF SQS-based S3 input and enable the LogStream SQS-based S3 source

Enable The Drop Function

- At this stage, we enable the drop function to remove all the Describe and List events

- Delete all the files from the S3 bucket and upload them again

- Validate that the logs are getting to LogStream

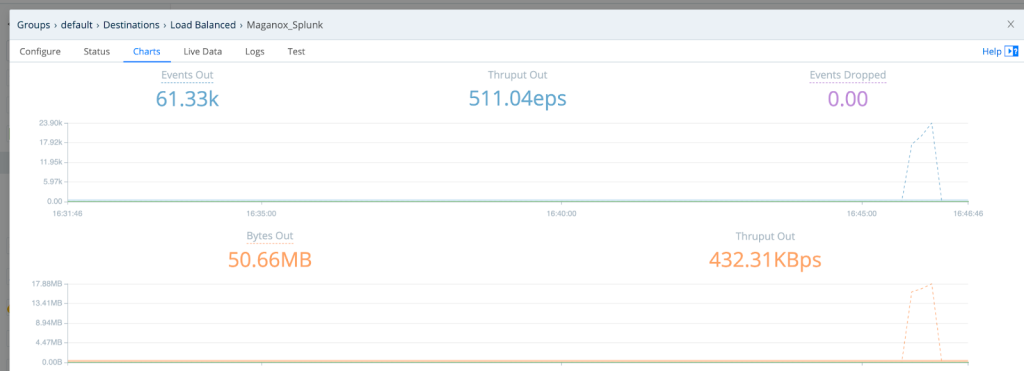

Validate that the logs are heading to the destination (Splunk)

Validate that the logs are reaching Splunk and are searchable

License Reduction

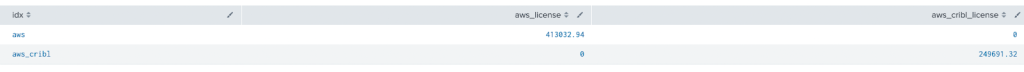

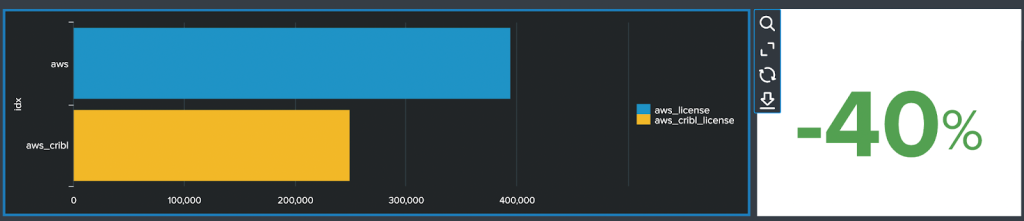

Now let’s compare the Splunk license consumed by the 4 sample log files:

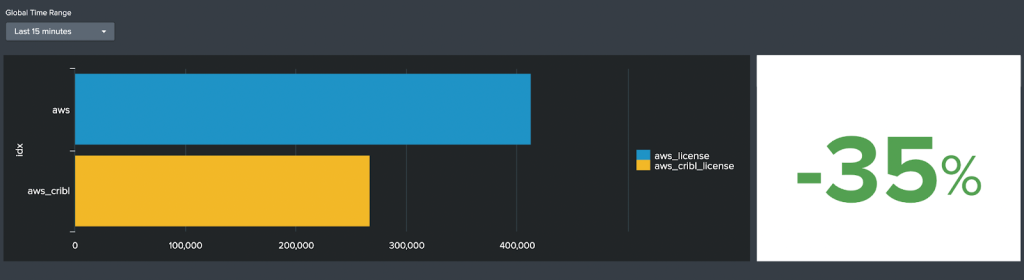

Disable The Drop Function

The same comparison, but this time with the drop function disabled:

Conclusion

- Not all CloudTrail events are essential for security, and Cribl LogStream can filter them out, either by dropping them or by routing them to another destination.

- Using LogStream’s built-in capabilities, we were able to drop redundant fields, as well as fields that can be calculated on the Splunk side using lookups. For example, we only send eventName and use lookups to calculate eventSource and eventType, since these are static values for CloudTrail events. In the table below, we send only the values in the first column and use a lookup to fill in the second and third.

| eventName | eventSource | eventType |
| --- | --- | --- |
| AssumeRole | sts.amazonaws.com | AwsApiCall |
| AttachRolePolicy | iam.amazonaws.com | AwsApiCall |
| AttachVolume | ec2.amazonaws.com | AwsApiCall |
| ConsoleLogin | signin.amazonaws.com | AwsConsoleSignIn |

- We achieved a license reduction of between 35% and 40% with a simple yet powerful use of LogStream.
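The lookup approach from the conclusion can be sketched in Python using the four rows of the table above: only eventName is sent, and eventSource and eventType are filled in from a static mapping, much as a Splunk lookup would do at search time. `EVENT_LOOKUP` and `enrich` are illustrative names. A real lookup would need to cover every event name you keep, and some event names (TagResource, for instance) exist in several AWS services, so those would need more than eventName to resolve correctly.

```python
# Rows taken from the table above; a full lookup would list every eventName you keep
EVENT_LOOKUP = {
    "AssumeRole": ("sts.amazonaws.com", "AwsApiCall"),
    "AttachRolePolicy": ("iam.amazonaws.com", "AwsApiCall"),
    "AttachVolume": ("ec2.amazonaws.com", "AwsApiCall"),
    "ConsoleLogin": ("signin.amazonaws.com", "AwsConsoleSignIn"),
}


def enrich(event: dict) -> dict:
    """Return a copy of the event with eventSource/eventType restored from the lookup."""
    enriched = dict(event)
    match = EVENT_LOOKUP.get(event.get("eventName", ""))
    if match is not None:
        # setdefault keeps any value that was sent explicitly
        enriched.setdefault("eventSource", match[0])
        enriched.setdefault("eventType", match[1])
    return enriched
```

Event names missing from the table pass through unenriched rather than being guessed.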

What other log sources do you want to see reduced with LogStream?

For which other destinations would you like to see log ingestion volume reduced?

Please visit our website https://aps-leo-site-eycqb4ardjg3hkd7.uksouth-01.azurewebsites.net/ or drop us an email at info@leocybsec.com, and we will be more than happy to help and answer any questions.